QoS (Quality of Service) Classification, Scheduler and Shaping


Quality of service (QoS) is a set of technologies that manage network traffic to ensure that critical applications perform well, even when network capacity is limited.

SONiC supports prioritization of critical data traffic, ensuring that high-priority packets are transmitted ahead of other traffic and that important data is not lost.

Limitations:

- QoS scheduling and shaping only work on physical interfaces, physical interface egress queues, and unicast queues. They don’t work on VLAN and port-channel interfaces.

- Intel Tofino does not support WRR scheduling.

- On Broadcom platforms, the default QoS scheduling discipline is WRR, with a weight of 1 for each queue.

- There is no minimum bandwidth guarantee support for queue shaping on Broadcom and Intel switches.

- Eight unicast egress queues (UC0 to UC7) are supported; multicast queues are not. Some platforms may display UC8 and UC9, but these queues are not supported.

Example model & SONiC version:

- Aurora 830, Aurora 721, Aurora 621, Aurora 221

- Netberg SONiC: sonic-broadcom-202311-n0

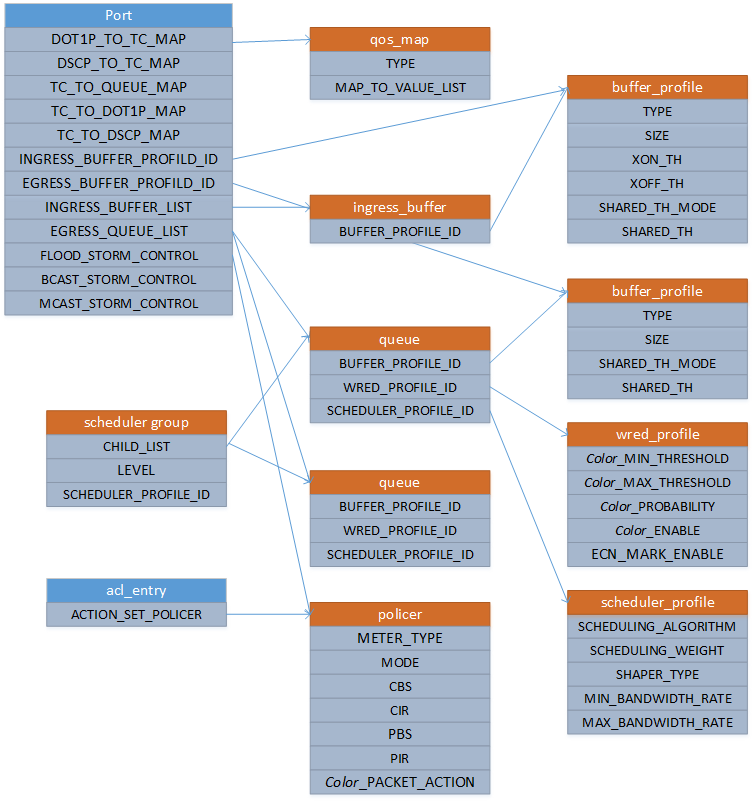

CONFIG_DB Tables

QoS uses the following tables in CONFIG_DB:

| Table | Description |
|---|---|
| TC_TO_QUEUE_MAP | In the egress direction, maps a traffic class to an egress queue. |
| DSCP_TO_TC_MAP | In the ingress direction, maps the DSCP field of an IP packet to a traffic class. Used together with TC_TO_QUEUE_MAP, it maps a packet from its DSCP value to an egress queue. If the egress queue becomes congested, back-pressure is triggered and a PFC frame can be sent. The priority carried in the PFC frame is determined by the TC-to-priority-group mapping table (TC_TO_PRIORITY_GROUP_MAP). |
| MAP_PFC_PRIORITY_TO_QUEUE | Maps the priority in received PFC frames to an egress queue, so that the switch knows which egress queue to pause or resume. |
| PFC_TO_PRIORITY_GROUP_MAP | Maps a PFC priority to a priority group (Intel only). |
| TC_TO_PRIORITY_GROUP_MAP | Maps a traffic class to a priority group. This lets the switch set the PFC priority in XON/XOFF frames so that the corresponding egress queue on the link peer is paused or resumed. |
| PORT_QOS_MAP | Defines the mappings adopted per port or globally, including dscp_to_tc_map, tc_to_queue_map, tc_to_pg_map, pfc_to_queue_map, pfc_enable, pfcwd_sw_enable, and scheduler. |
| QUEUE | Defines the settings for each port/queue, including scheduler and wred_profile. |
| SCHEDULER | QoS scheduler profile attributes, including type, weight, meter_type, pir, and pbs. |
| WRED_PROFILE | WRED and ECN profile parameters. |

QoS Classification

QoS classification assigns each packet a Traffic Class (TC) value based on its service requirements. The TC is the switch's internal priority value (8 possible values) and is used for packet buffering and scheduling. The TC-to-Priority-Group (PG) map uses the TC to select the ingress buffer (priority group). The TC-to-Queue map uses it to select the egress queue. The TC can also serve as a priority field for packet re-marking on an egress port. The TC is determined from the DSCP field in the IP header.

SONiC supports Dot1p-to-TC mapping only for the “localhost” device type. In all other cases, DSCP-to-TC mapping will be used.

DSCP-to-TC Mapping

DSCP-to-TC Mapping assigns the TC using the DSCP field in the IP packet in the ingress direction.

Default values in SONiC:

| DSCP | Traffic Class |
|---|---|
| 0-2 | 1 |
| 3 | 3 |
| 4 | 4 |
| 5 | 2 |
| 6, 7 | 1 |
| 8 | 0 |
| 9-45 | 1 |
| 46 | 5 |
| 47 | 1 |
| 48 | 6 |
| 49-63 | 1 |
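The default table above corresponds to the CONFIG_DB-style dictionary shown in the PORT_QOS_MAP section. The following Python sketch expands the default ranges into one string entry per DSCP value and checks that all 64 code points are covered. The helper name `build_dscp_to_tc_map` and the range list are illustrative assumptions, not part of SONiC:

```python
# Default DSCP-to-TC ranges from the table above: (first DSCP, last DSCP, TC).
DEFAULT_DSCP_RANGES = [
    (0, 2, 1), (3, 3, 3), (4, 4, 4), (5, 5, 2), (6, 7, 1), (8, 8, 0),
    (9, 45, 1), (46, 46, 5), (47, 47, 1), (48, 48, 6), (49, 63, 1),
]


def build_dscp_to_tc_map(ranges=DEFAULT_DSCP_RANGES):
    """Expand DSCP ranges into a CONFIG_DB-style {"dscp": "tc"} dictionary (hypothetical helper)."""
    mapping = {}
    for first, last, tc in ranges:
        for dscp in range(first, last + 1):
            mapping[str(dscp)] = str(tc)  # CONFIG_DB stores both sides as strings
    # Every one of the 64 DSCP code points should have a TC.
    if sorted(int(k) for k in mapping) != list(range(64)):
        raise ValueError("DSCP ranges do not cover 0-63")
    return mapping


dscp_map = build_dscp_to_tc_map()
print(dscp_map["46"])  # prints 5
```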

TC-to-Queue Mapping

TC-to-Queue mapping assigns the egress Queue ID based on the Traffic Class.

Default values in SONiC:

| TC | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| Queue | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

TC-to-Priority-Group Mapping

Maps a traffic class to a priority group. This lets the switch set the PFC priority in XON/XOFF frames so that the corresponding egress queue on the link peer is paused or resumed.

Default values in SONiC:

| TC | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| PG | 0 | 0 | 0 | 3 | 4 | 0 | 0 | 7 |
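Read together, the two default tables determine where each TC lands. The following Python sketch places a TC in its ingress priority group and egress queue, and groups the TCs that share a PG. It shows that TCs 3, 4 and 7 get dedicated PGs while the others share PG 0. The function names are hypothetical, and the lists simply transcribe the defaults above:

```python
# Default maps from the two tables above, indexed by TC (0-7).
DEFAULT_TC_TO_QUEUE = [0, 1, 2, 3, 4, 5, 6, 7]
DEFAULT_TC_TO_PG = [0, 0, 0, 3, 4, 0, 0, 7]


def place(tc):
    """Return (ingress priority group, egress queue) for a traffic class."""
    if not 0 <= tc <= 7:
        raise ValueError(f"TC must be 0-7, got {tc}")
    return DEFAULT_TC_TO_PG[tc], DEFAULT_TC_TO_QUEUE[tc]


def tcs_per_pg():
    """Group traffic classes by the priority group they share."""
    groups = {}
    for tc, pg in enumerate(DEFAULT_TC_TO_PG):
        groups.setdefault(pg, []).append(tc)
    return groups


print(place(3))      # (3, 3)
print(tcs_per_pg())  # {0: [0, 1, 2, 5, 6], 3: [3], 4: [4], 7: [7]}
```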

PORT_QOS_MAP

This table defines the QoS mappings adopted by a port.

Example:

```json
"Ethernet0": {
    "dscp_to_tc_map": "AZURE",
    "pfc_enable": "3,4",
    "pfc_to_queue_map": "AZURE",
    "pfcwd_sw_enable": "3,4",
    "tc_to_pg_map": "AZURE",
    "tc_to_queue_map": "AZURE"
},
"Ethernet1": {
    "dscp_to_tc_map": "AZURE",
    "pfc_enable": "3,4",
    "pfc_to_queue_map": "AZURE",
    "pfcwd_sw_enable": "3,4",
    "tc_to_pg_map": "AZURE",
    "tc_to_queue_map": "AZURE"
},
```

Each mapping is defined under a name, for example:

```json
"DSCP_TO_TC_MAP": {
    "AZURE": {
        "0": "1",
        "1": "1",
        "10": "1",
        ...
        "63": "1",
        "7": "1",
        "8": "0",
        "9": "1"
    }
},
```

The PORT_QOS_MAP table references these names to apply complete sets of mappings to each port. Users can also create custom maps and reference them by name.
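The name-based indirection can be modeled in a few lines of Python. This sketch follows a port's PORT_QOS_MAP fields to the named DSCP_TO_TC_MAP and TC_TO_QUEUE_MAP entries to classify a packet. Several parts are assumptions for illustration:

- The CONFIG_DB contents are trimmed.
- The `CUSTOM` map and port `Ethernet4` are hypothetical.
- The function names are invented.
- A TC listed in `pfc_enable` is treated as PFC-enabled, which assumes the TC and PFC priority coincide, as they do in the defaults.

```python
# Trimmed CONFIG_DB contents; only a few DSCP entries are listed for brevity.
CONFIG_DB = {
    "DSCP_TO_TC_MAP": {
        "AZURE": {"0": "1", "3": "3", "4": "4", "8": "0", "46": "5"},
        "CUSTOM": {"46": "7"},  # hypothetical user-defined map
    },
    "TC_TO_QUEUE_MAP": {"AZURE": {str(tc): str(tc) for tc in range(8)}},
    "PORT_QOS_MAP": {
        "Ethernet0": {"dscp_to_tc_map": "AZURE", "tc_to_queue_map": "AZURE", "pfc_enable": "3,4"},
        "Ethernet4": {"dscp_to_tc_map": "CUSTOM", "tc_to_queue_map": "AZURE"},  # hypothetical port
    },
}


def resolve(db, port, field, table):
    """Follow a PORT_QOS_MAP field to the named map it references in another table."""
    return db[table][db["PORT_QOS_MAP"][port][field]]


def classify(db, port, dscp):
    """Return (tc, egress queue, pfc_enabled) for a packet received on `port`."""
    tc = resolve(db, port, "dscp_to_tc_map", "DSCP_TO_TC_MAP")[str(dscp)]
    queue = resolve(db, port, "tc_to_queue_map", "TC_TO_QUEUE_MAP")[tc]
    pfc_tcs = db["PORT_QOS_MAP"][port].get("pfc_enable", "").split(",")
    return int(tc), int(queue), tc in pfc_tcs


print(classify(CONFIG_DB, "Ethernet0", 3))   # (3, 3, True)
print(classify(CONFIG_DB, "Ethernet0", 46))  # (5, 5, False)
print(classify(CONFIG_DB, "Ethernet4", 46))  # (7, 7, False)
```

Swapping a port to a different map only changes the name stored in PORT_QOS_MAP. The maps themselves are shared by every port that references them.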

QoS Scheduling

Quality of Service (QoS) scheduling and shaping features give selected traffic flows preferential treatment and controlled bandwidth.

Queue scheduling provides preferential treatment of traffic classes mapped to specific egress queues. SONiC supports SP, WRR, and DWRR scheduling disciplines.

- SP (Strict Priority): Higher-priority egress queues are always scheduled for transmission before lower-priority queues.

- WRR (Weighted Round Robin): Egress queues receive bandwidth in proportion to their configured weights. Scheduling is done per packet, so large and small packets count the same. As a result, flows with large packets receive more bandwidth than flows with small packets.

- DWRR (Deficit Weighted Round Robin): Similar to WRR, but each queue keeps a deficit counter measured in bytes. This accounts for packet-size variations and yields a more accurate bandwidth proportion.
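The difference between WRR and DWRR shows up when two equally weighted queues carry very different packet sizes. The following Python sketch is a simplified software model, not how an ASIC implements either scheduler. It uses two permanently backlogged queues, one with 1500-byte packets and one with 64-byte packets. The 1500-byte DWRR quantum and all function names are assumptions chosen for illustration:

```python
from collections import deque


def backlog(pkt_size, count=100_000):
    """A queue that stays non-empty for the whole simulation."""
    return deque([pkt_size] * count)


def wrr(queues, weights, rounds):
    """Per-packet WRR: in each round, queue i may send up to weights[i] packets."""
    sent = [0] * len(queues)  # bytes sent per queue
    for _ in range(rounds):
        for i, q in enumerate(queues):
            for _ in range(weights[i]):
                if not q:
                    break
                sent[i] += q.popleft()
    return sent


def dwrr(queues, weights, rounds, quantum=1500):
    """DWRR: in each round, queue i earns weights[i] * quantum bytes of credit."""
    deficit = [0] * len(queues)
    sent = [0] * len(queues)
    for _ in range(rounds):
        for i, q in enumerate(queues):
            if not q:
                deficit[i] = 0  # an idle queue does not bank credit
                continue
            deficit[i] += weights[i] * quantum
            # Send head packets while the accumulated credit covers them.
            while q and q[0] <= deficit[i]:
                pkt = q.popleft()
                deficit[i] -= pkt
                sent[i] += pkt
    return sent


# Equal weights; queue 0 carries 1500-byte packets, queue 1 carries 64-byte packets.
print(wrr([backlog(1500), backlog(64)], [1, 1], rounds=100))   # [150000, 6400]
print(dwrr([backlog(1500), backlog(64)], [1, 1], rounds=100))  # [150000, 149952]
```

Under WRR the large-packet queue gets about 23 times the bytes of the small-packet queue. Under DWRR both queues get nearly the same byte count, which is what equal weights are meant to express.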

Scalability

The maximum number of QoS scheduling and shaping profiles depends on the ASIC vendor's SAI support.

Scaling limits:

| Name | Scaling value |
|---|---|
| Number of interfaces | Max physical ports |
| Number of queues | Max egress queues |
| Number of scheduler profiles | 128 |

Default Configuration

SONiC generates two default schedulers:

```json
"SCHEDULER": {
    "scheduler.0": {
        "type": "DWRR",
        "weight": "14"
    },
    "scheduler.1": {
        "type": "DWRR",
        "weight": "15"
    }
},
```

Attaching Schedulers

The QUEUE table attaches schedulers to each port/queue.

```json
"Ethernet0|0": {
    "scheduler": "scheduler.0"
},
"Ethernet0|1": {
    "scheduler": "scheduler.0"
},
"Ethernet0|2": {
    "scheduler": "scheduler.0"
},
"Ethernet0|3": {
    "scheduler": "scheduler.1",
    "wred_profile": "AZURE_LOSSLESS"
},
"Ethernet0|4": {
    "scheduler": "scheduler.1",
    "wred_profile": "AZURE_LOSSLESS"
},
"Ethernet0|5": {
    "scheduler": "scheduler.0"
},
"Ethernet0|6": {
    "scheduler": "scheduler.0"
},
```

Queues 3 and 4 are PFC-enabled in this example. They also have a WRED profile attached.
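The DWRR weights translate into bandwidth shares once every queue is contending. In this example the weights happen to sum to 100 (five queues at 14 plus two at 15). The following Python sketch computes each queue's share from the SCHEDULER and QUEUE entries above. It relies on these assumptions:

- All listed queues are continuously backlogged.
- No queue is strict priority or shaped.
- Queue 7 is omitted because it does not appear in the example.
- Only single-port keys such as `Ethernet0|3` or `Ethernet0|0-5` are parsed.

The helper names are hypothetical.

```python
SCHEDULER = {
    "scheduler.0": {"type": "DWRR", "weight": "14"},
    "scheduler.1": {"type": "DWRR", "weight": "15"},
}

QUEUE = {
    "Ethernet0|0": {"scheduler": "scheduler.0"},
    "Ethernet0|1": {"scheduler": "scheduler.0"},
    "Ethernet0|2": {"scheduler": "scheduler.0"},
    "Ethernet0|3": {"scheduler": "scheduler.1", "wred_profile": "AZURE_LOSSLESS"},
    "Ethernet0|4": {"scheduler": "scheduler.1", "wred_profile": "AZURE_LOSSLESS"},
    "Ethernet0|5": {"scheduler": "scheduler.0"},
    "Ethernet0|6": {"scheduler": "scheduler.0"},
}


def queue_ids(spec):
    """Expand a queue spec such as "3" or "0-5" into queue numbers."""
    if "-" in spec:
        first, last = (int(x) for x in spec.split("-"))
        return list(range(first, last + 1))
    return [int(spec)]


def dwrr_shares(port, queue_table, scheduler_table):
    """Fraction of port bandwidth per DWRR queue when every queue is backlogged."""
    weights = {}
    for key, attrs in queue_table.items():
        name, spec = key.split("|")
        if name != port:
            continue
        sched = scheduler_table[attrs["scheduler"]]
        if sched.get("type") != "DWRR":
            continue
        for q in queue_ids(spec):
            weights[q] = int(sched["weight"])
    total = sum(weights.values())
    return {q: weights[q] / total for q in sorted(weights)}


for q, share in dwrr_shares("Ethernet0", QUEUE, SCHEDULER).items():
    print(f"queue {q}: {share:.0%}")  # 14% for scheduler.0 queues, 15% for queues 3 and 4
```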

QoS Shaping

Queue shaping in SONiC controls the maximum bandwidth available to each egress queue, for more effective bandwidth utilization. An egress queue whose average transmission rate exceeds the shaper's maximum bandwidth is not serviced until its rate falls back within the limit. Meanwhile, incoming traffic is held in the egress queue. Once the queue size is exceeded, further packets are tail-dropped.

This feature builds on the QoS scheduling capabilities described above: shapers are configured as SCHEDULER profiles.
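The following Python sketch models the shaping behavior described above with a generic token bucket. It assumes `pir` is the refill rate in bytes per second and `pbs` is the bucket depth in bytes, as suggested by `meter_type` `bytes` in the examples below. It also models a bounded queue that tail-drops once full. This is an illustrative model, not the SAI or ASIC implementation. The function name, tick size, and queue depth are hypothetical:

```python
from collections import deque


def shape_queue(pir, pbs, queue_limit, arrivals_per_us, pkt_size, duration_us):
    """Simulate one shaped egress queue in 1-microsecond ticks.

    pir: peak information rate in bytes/s (meter_type "bytes").
    pbs: token bucket depth in bytes (should be at least one packet).
    queue_limit: queue depth in packets; arrivals beyond it are tail-dropped.
    Returns (bytes_sent, packets_dropped).
    """
    tokens_per_us = pir // 1_000_000  # integer refill per tick, in bytes
    tokens = pbs                       # bucket starts full
    queue = deque()
    sent = dropped = 0
    for _ in range(duration_us):
        for _ in range(arrivals_per_us):
            if len(queue) < queue_limit:
                queue.append(pkt_size)
            else:
                dropped += 1           # tail drop: queue is already full
        tokens = min(pbs, tokens + tokens_per_us)
        # Transmit only while enough tokens remain for the head packet.
        while queue and queue[0] <= tokens:
            pkt = queue.popleft()
            tokens -= pkt
            sent += pkt
    return sent, dropped


# 10 Gbps shaper (1.25 GB/s) fed 8 Gbps: everything is transmitted.
print(shape_queue(1_250_000_000, 8192, 100, 1, 1000, 1000))  # (1000000, 0)
# Same shaper fed 24 Gbps (two 1500-byte packets per microsecond): tail drops.
sent, dropped = shape_queue(1_250_000_000, 8192, 100, 2, 1500, 1000)
print(sent <= 8192 + 1250 * 1000, dropped > 0)  # True True
```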

Examples

Port “Ethernet52” egress queues 0 through 5 will be limited to 10 Gbps each (1.25 GB/s):

```json
{
    "SCHEDULER": {
        "scheduler.queue": {
            "meter_type": "bytes",
            "pir": "1250000000",
            "pbs": "8192"
        }
    },
    "QUEUE": {
        "Ethernet52|0-5": {
            "scheduler": "[SCHEDULER|scheduler.queue]"
        }
    }
}
```

Port “Ethernet52” as a whole will be limited to 8 Gbps (1 GB/s):

```json
{
    "SCHEDULER": {
        "scheduler.port": {
            "meter_type": "bytes",
            "pir": "1000000000",
            "pbs": "8192"
        }
    },
    "PORT_QOS_MAP": {
        "Ethernet52": {
            "scheduler": "[SCHEDULER|scheduler.port]"
        }
    }
}
```
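With `meter_type` set to `bytes`, `pir` is a rate in bytes per second, so a bit rate must be divided by 8 (10 Gbps is 1250000000, 8 Gbps is 1000000000). The following Python sketch converts a rate in Mbit/s and builds a queue-shaping fragment in the same shape as the queue-level example above. The function names are hypothetical, and the default `pbs` of 8192 is simply copied from the examples:

```python
import json


def pir_from_mbps(mbps):
    """Convert a rate in Mbit/s to a byte-based PIR in bytes/s (8 bits per byte)."""
    return mbps * 1_000_000 // 8


def queue_shaper_config(port, queues, mbps, name="scheduler.queue", pbs=8192):
    """Build SCHEDULER and QUEUE entries that shape `queues` on `port` to `mbps`."""
    return {
        "SCHEDULER": {
            name: {
                "meter_type": "bytes",
                "pir": str(pir_from_mbps(mbps)),
                "pbs": str(pbs),
            }
        },
        "QUEUE": {
            f"{port}|{queues}": {"scheduler": f"[SCHEDULER|{name}]"}
        },
    }


print(pir_from_mbps(8_000))  # 1000000000, as in the port-level example
print(json.dumps(queue_shaper_config("Ethernet52", "0-5", 10_000), indent=4))
```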
